Texture synthesis experiments from my first year at Georgia Tech’s Art and AI Vertically Integrated Project. My proposal to use GANs for rapid, art-directed texture synthesis on 3D models was selected as one of the group’s primary projects during my first semester. Under the guidance of upperclassmen, we developed the idea into a prototype end-to-end texturing workflow that uses Golnaz Ghiasi’s Style Transfer Neural Network and Antoine Mehdi’s Normal Map Generator. I created all of the renders below.

Problem: When art-directing an experimental 3D graphics project, finding unique, tileable textures tailored to the project’s needs is one of the most exhausting parts of the process. Common physical materials such as metal or wood are available in most material libraries, but more abstract, imaginative textures must be created from scratch. Programs like Substance Painter skip these steps by letting artists paint directly onto models, but the model’s UVs must first be carefully unwrapped, which is a tedious task.

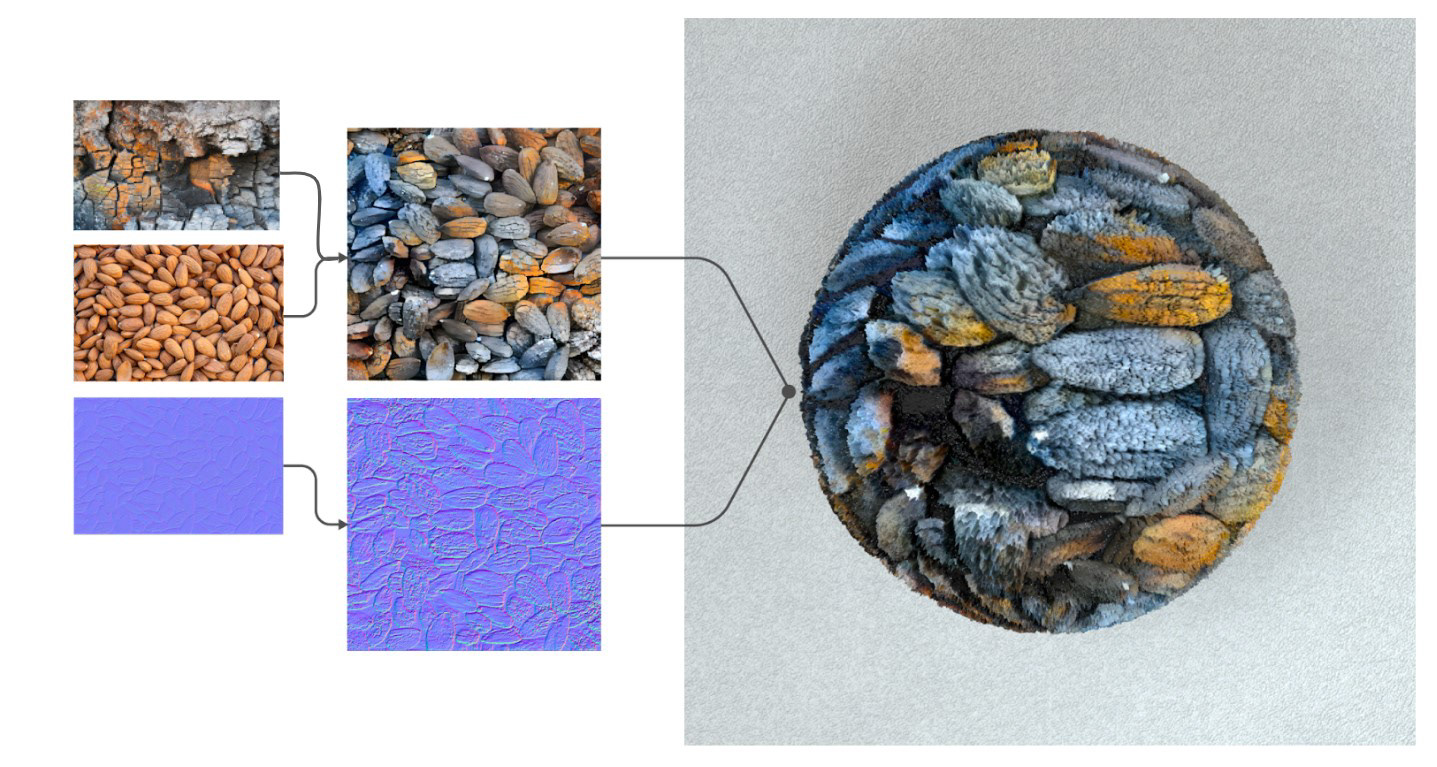

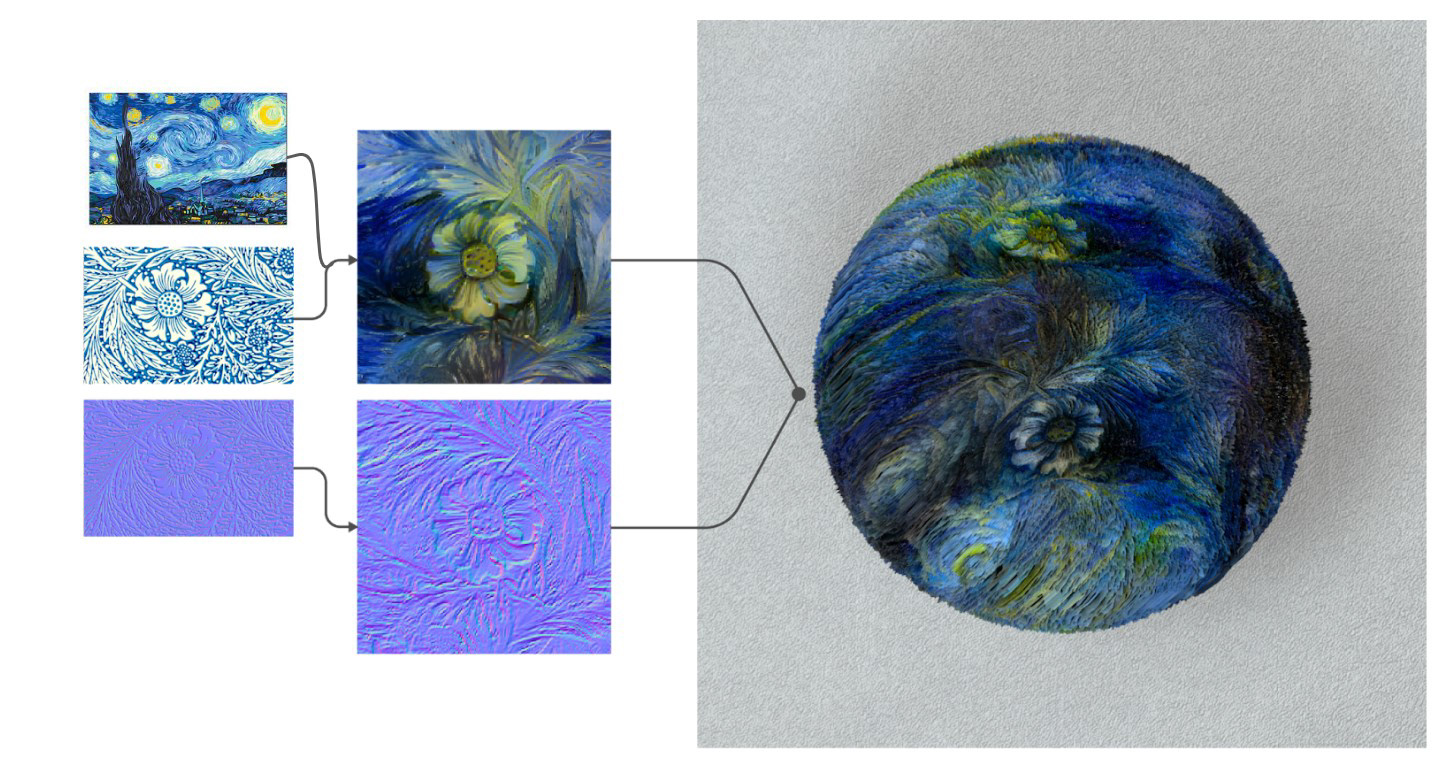

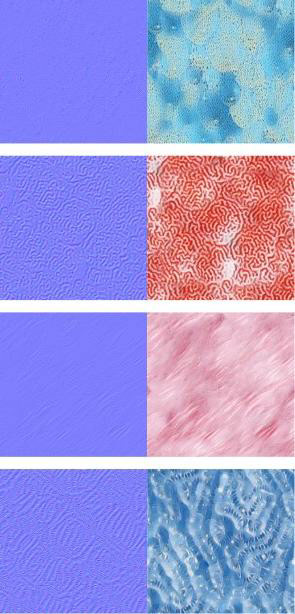

Solution: We assembled a tool in Google Colab that generates a set of artistic color (RGB) and normal-map textures in a few clicks, synthesized entirely by neural networks, so artists can experiment with new textures quickly. The artist supplies a starting image and a style image reflecting the intended look, and the style transfer network renders the starting image in that style. A normal map is then generated from the stylized result, and the new texture set is available for download. Unfortunately, our final prototype could not produce tileable textures, an improvement the tool would need to be practical.
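To illustrate what the normal-map step produces, here is a minimal sketch of a classical, non-learned approach. It is an assumption-based stand-in, not Antoine Mehdi’s Normal Map Generator (which is one of the AI models in our tool): it treats the luminance of the stylized image as a height field, takes finite-difference gradients with NumPy, and encodes the resulting surface normals as RGB. The function name `height_to_normal_map` and its `strength` parameter are hypothetical.

```python
import numpy as np

# Hypothetical helper: a classical stand-in for the tool's learned normal map generator.
def height_to_normal_map(rgb: np.ndarray, strength: float = 2.0) -> np.ndarray:
    """Turn an (H, W, 3) RGB image with values in [0, 1] into an (H, W, 3)
    tangent-space normal map in [0, 1], treating luminance as height."""
    # Collapse color to a single height value using Rec. 709 luma weights.
    height = rgb[..., :3] @ np.array([0.2126, 0.7152, 0.0722])
    # Finite differences: np.gradient returns d/d(row) first, then d/d(column).
    d_row, d_col = np.gradient(height)
    # The normal of the surface z = h(x, y) is proportional to (-dh/dx, -dh/dy, 1).
    # Image rows grow downward, so this green channel is "Y-down" (DirectX-style);
    # negate ny if the renderer expects OpenGL-style (Y-up) normal maps.
    nx = -strength * d_col
    ny = -strength * d_row
    nz = np.ones_like(height)
    normals = np.stack([nx, ny, nz], axis=-1)
    normals /= np.linalg.norm(normals, axis=-1, keepdims=True)
    # Remap each component from [-1, 1] to [0, 1] so it can be saved as an image.
    return normals * 0.5 + 0.5
```

A flat input yields the neutral color (0.5, 0.5, 1.0) everywhere, the pale blue-violet of an unperturbed normal map. Because `np.gradient` uses one-sided differences at the borders, this sketch does not wrap around the image edges; a tileable version would also need periodic differences (for example with `np.roll`) applied to an already-tileable input.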

Art-Direction Test:

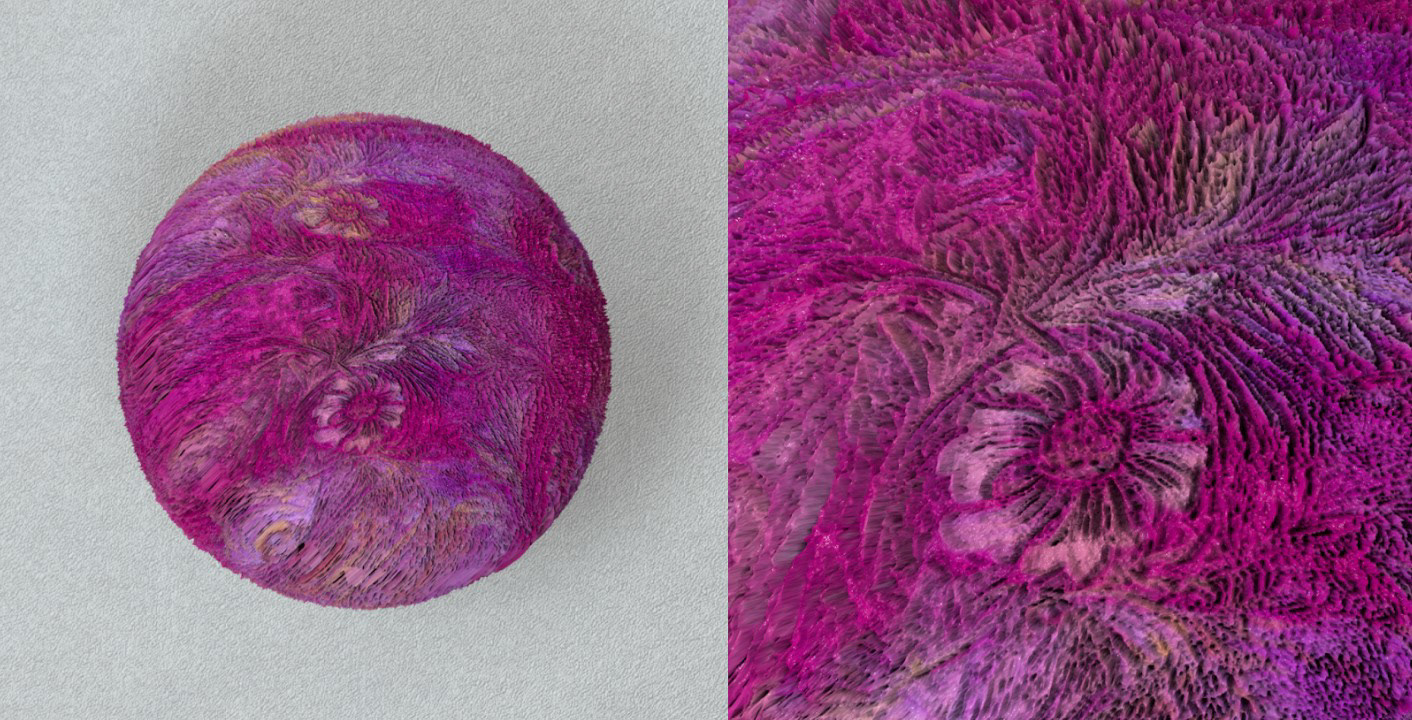

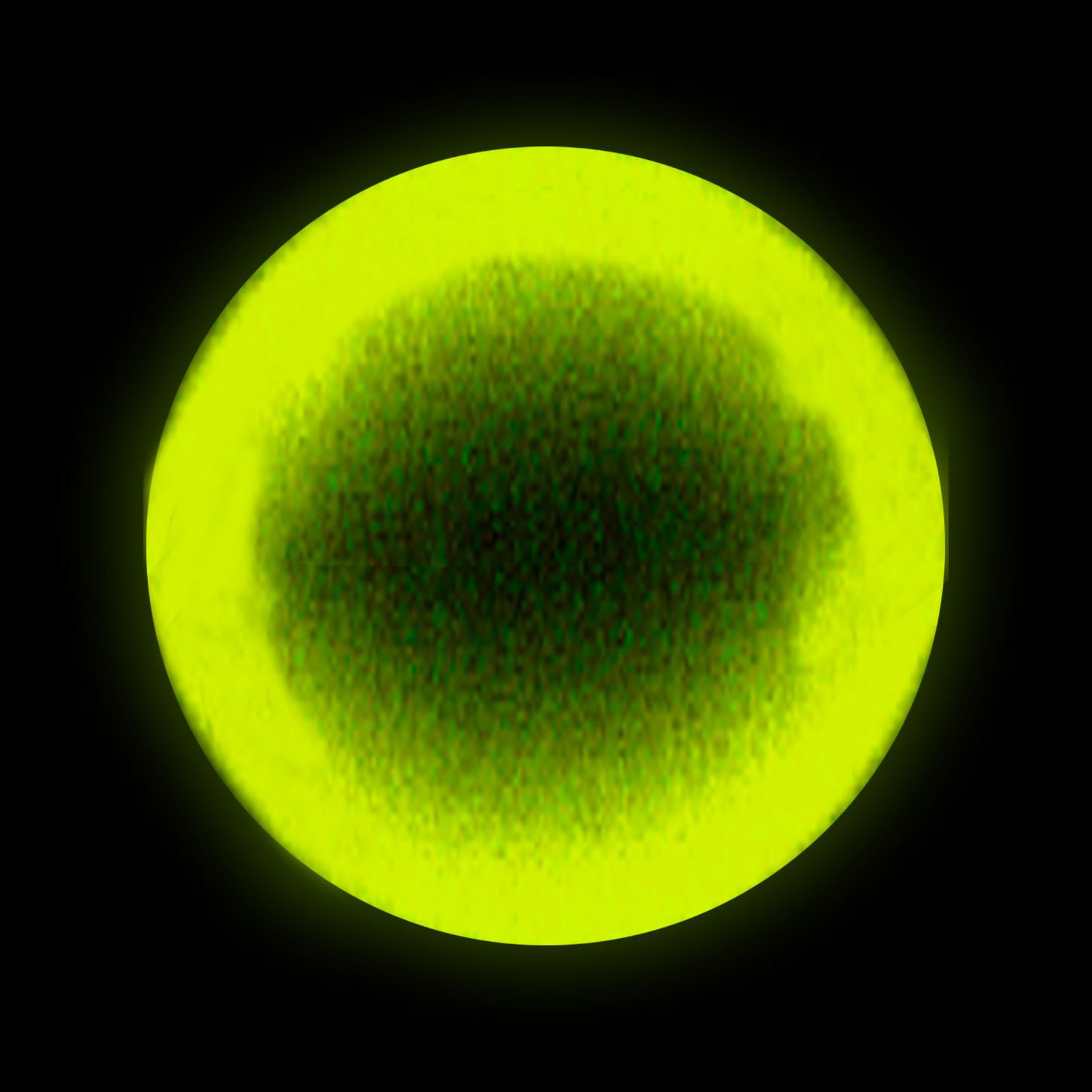

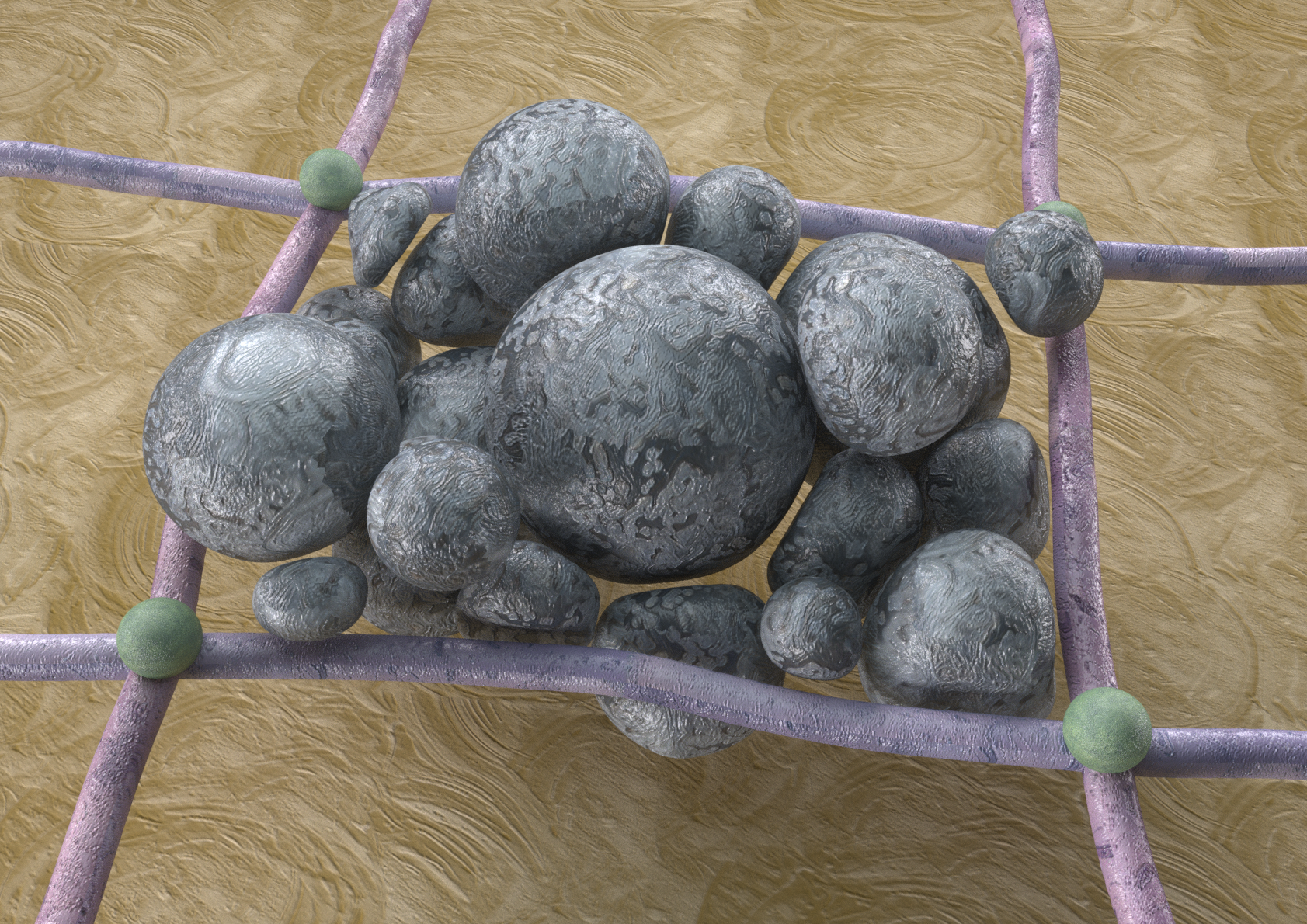

Since our tool could turn images of almost anything into usable, interesting textures, I wondered whether it could also help communicate hard-to-visualize research on campus in a creative way. Through the Science.Art.Wonder initiative, I had the lovely opportunity to collaborate with mechanical engineering researcher Dongjing He on an art project representing his research. Because his work involves soft-body mechanics, I used a soft-body simulation to represent a polymer network.

Completed S.A.W. Animation

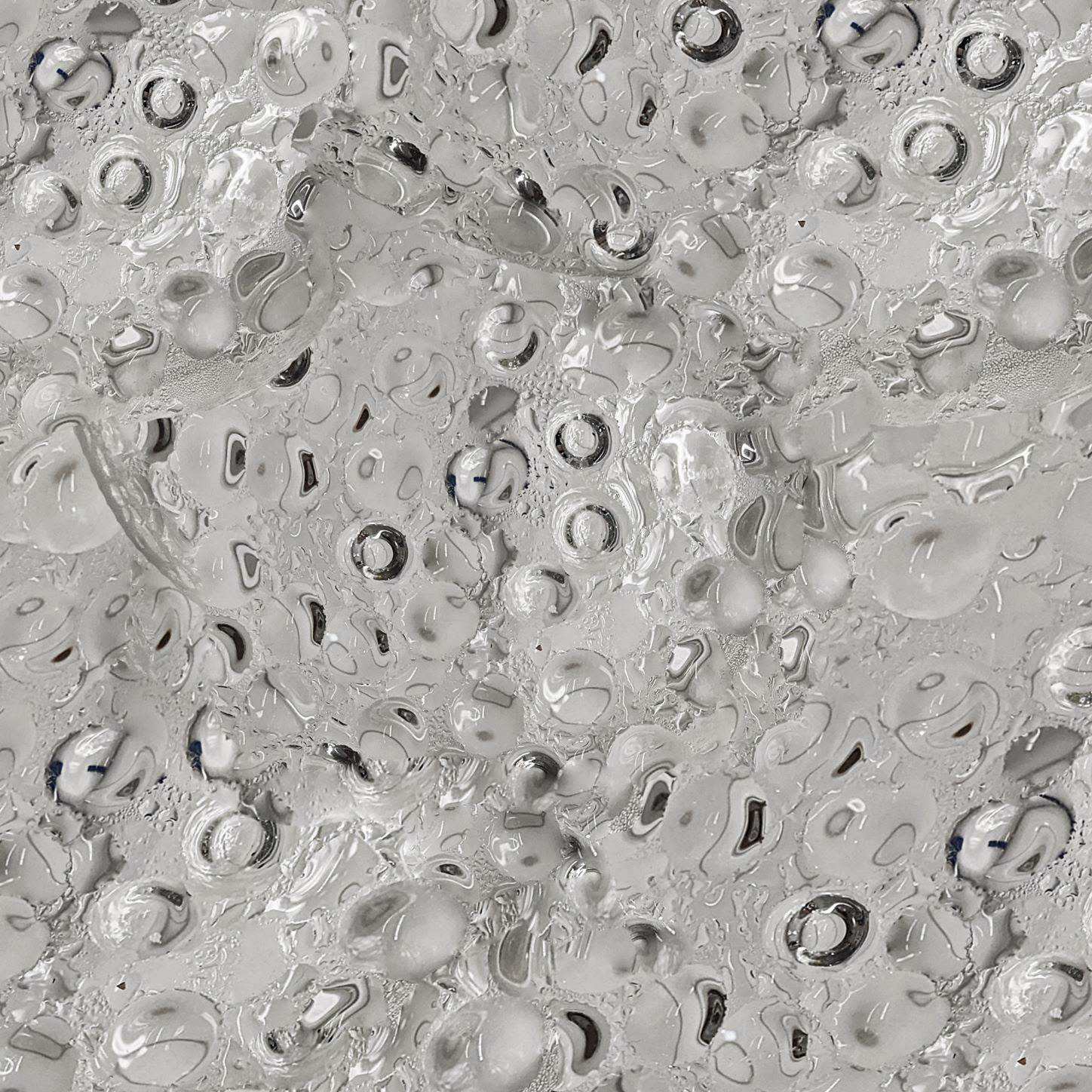

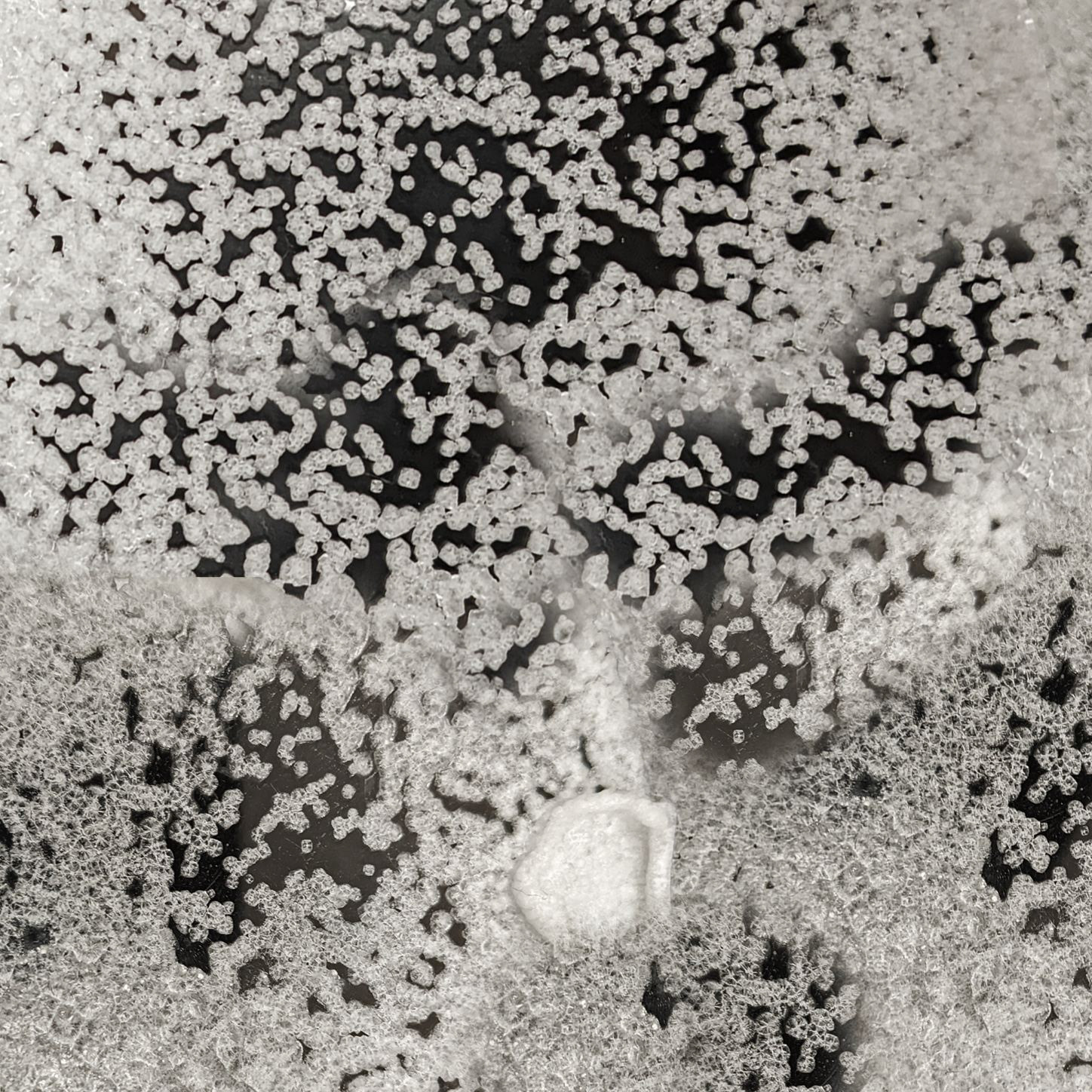

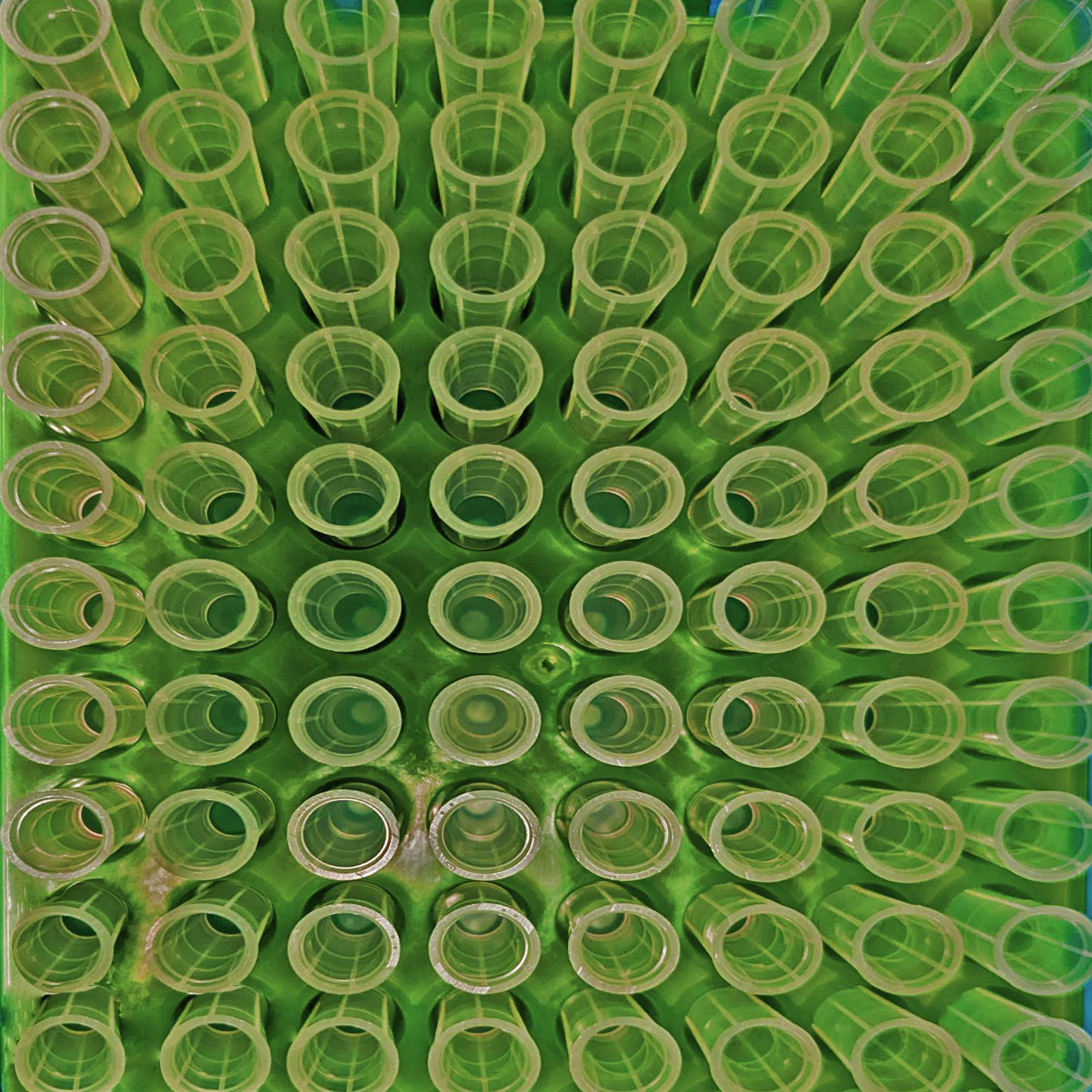

Although I had finished the animation before this project, I decided to revisit He’s research to see whether I could translate his work into usable textures. I started by taking macro photos of the gels, the equipment, and the microscope images in his lab.

Using the AI models built into our tool, I converted these photos into an interesting set of textures!

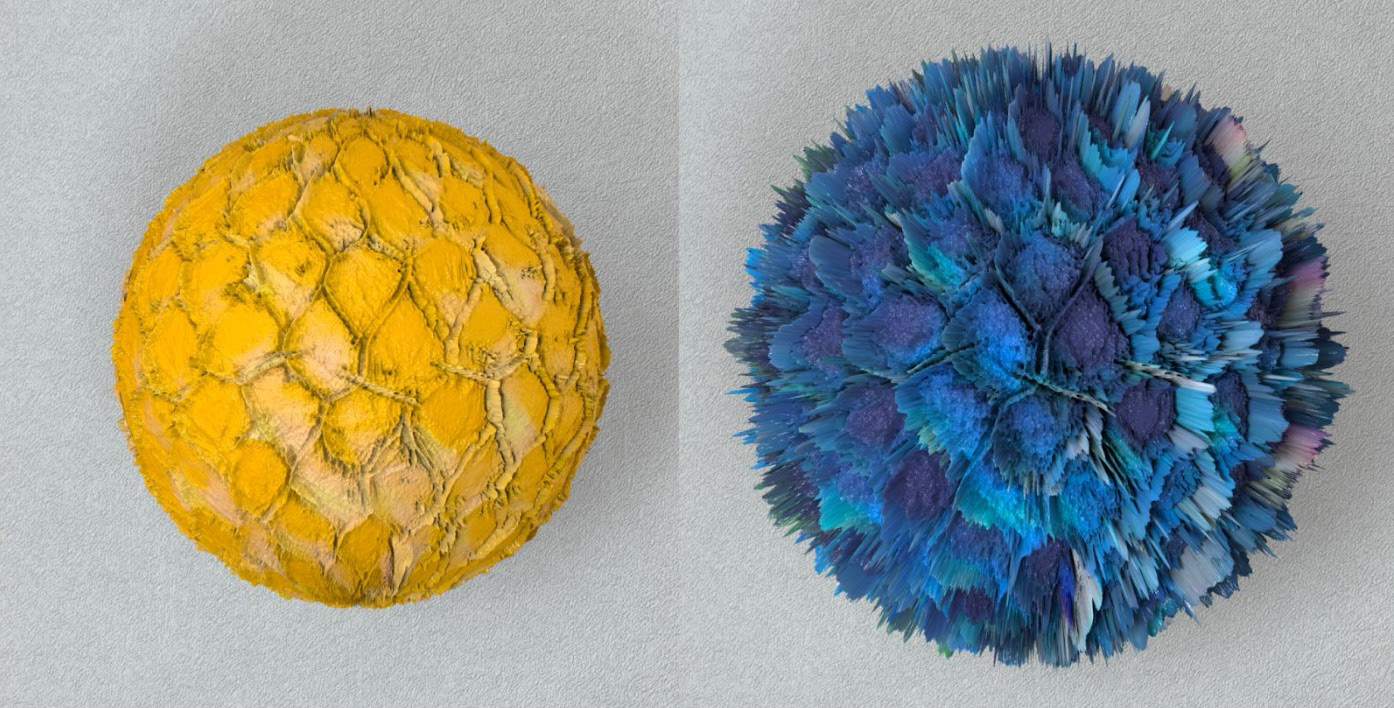

Revised Style Frames (using new textures)

---

Style Transfer Neural Network, Normal Map Generator, Cinema 4D, Octane Render